Architecting Trustworthy Artificial Intelligence in Medical Devices

11 Nov 2025

Minimizing Risk from Data Scarcity to Bias Mitigation

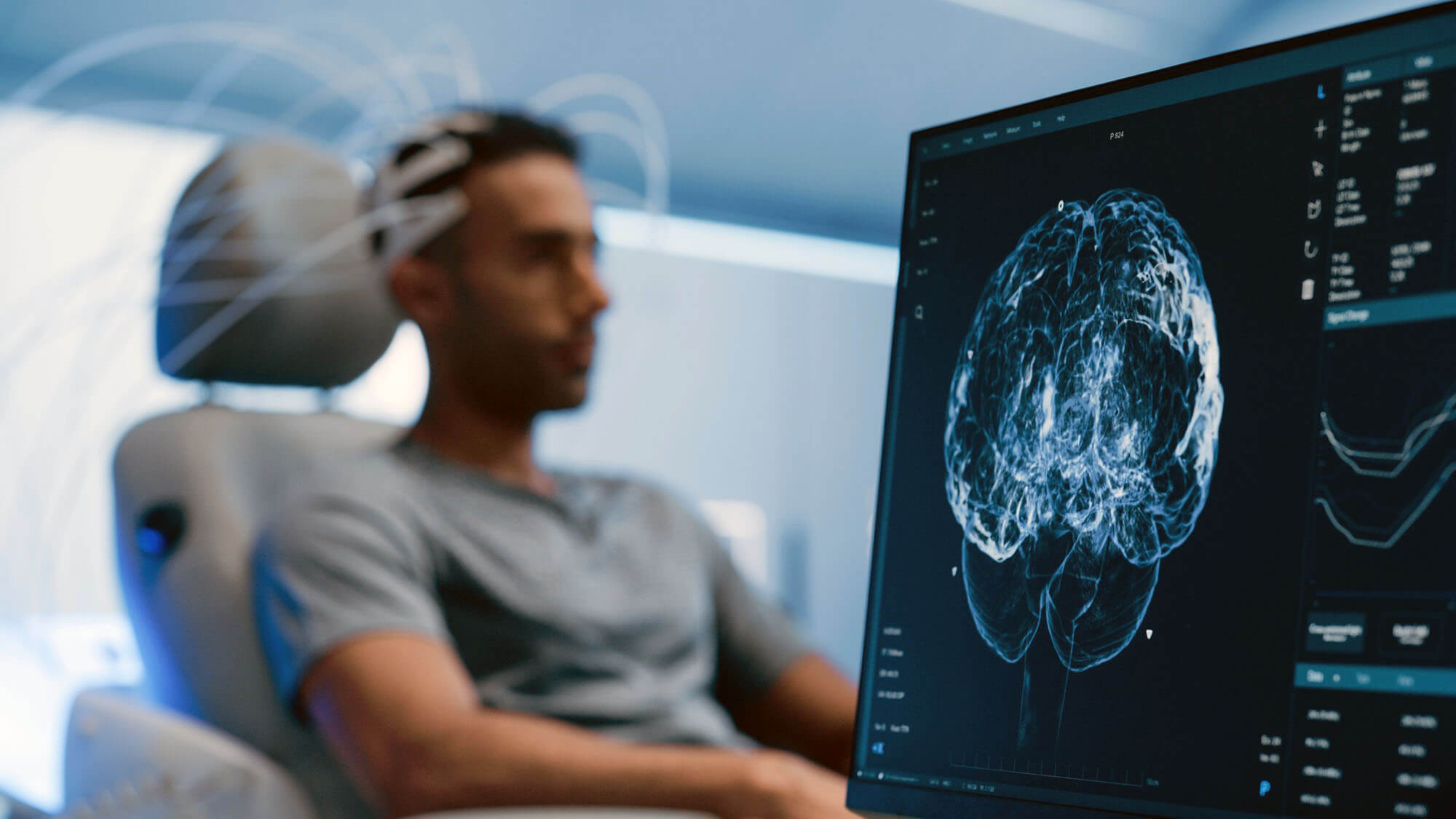

The integration of artificial intelligence (AI) in healthcare demands a uniquely rigorous approach, particularly when compared to other high-tech domains such as e-commerce or personalized recommendation systems. Those sectors also rely on advanced machine learning and real-time decision-making, but the healthcare environment introduces a distinct set of ethical, clinical, and operational challenges. In the medical domain, the margin for error is exceptionally narrow; decisions made or assisted by AI systems can directly affect patient outcomes, clinical workflows, and even survival rates.

Developing AI for medical applications is not merely a technical exercise; it is an exercise in responsibility. Mispredictions, biased outputs, or instability in algorithmic performance can have severe consequences. As such, engineering AI in this space requires a balance of innovation and caution. It calls for algorithmic precision, but also demands a deep integration of domain knowledge, clinical context, and regulatory foresight.

One of the most critical factors in building reliable AI for healthcare is the quality, diversity, and representativeness of the underlying data. Unlike other sectors, where data can often be generated at scale in controlled environments, medical data is inherently sensitive, highly variable, and often limited in volume. Furthermore, demographic imbalances, such as the overrepresentation of certain age groups, genders, or ethnicities in clinical data, can introduce systemic biases into AI models. Addressing these challenges requires not only data-centric techniques such as smart sampling, augmentation, and transfer learning, but also rigorous fairness audits and model interpretability measures.

Ultimately, building robust AI in the medical sector hinges on designing systems that are both technically sound and ethically grounded. This means prioritizing transparency, explainability, and ongoing validation in real-world clinical settings, ensuring that AI does not just perform well in development environments but continues to perform safely and equitably once deployed.

Data Scarcity and Privacy: A Dual Challenge

AI thrives on data, but in the medical domain data is both scarce and highly sensitive. In other industries, sensor logs can be generated at scale; clinical datasets are harder to come by because of privacy restrictions, regulatory oversight, and data silos. To counter this, we’re seeing the rise of federated learning, differential privacy, and homomorphic encryption in medtech AI workflows. Federated learning trains models across multiple hospitals or care networks without pooling raw patient records, while differential privacy and homomorphic encryption further limit what the exchanged model updates can reveal about individual patients. Together, these techniques protect privacy while improving model generalization.
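To make the federated idea concrete, here is a minimal sketch of federated averaging (FedAvg), assuming a simple logistic-regression model and three hypothetical hospital sites with synthetic data. Each site trains on its own records and shares only a weight vector; the aggregator averages those vectors, weighted by local sample count. The names `local_train`, `federated_average`, and `run_fedavg` are illustrative, and differential privacy or homomorphic encryption, which would be layered onto the weight exchange, are not shown.

```python
import math
import random

# Minimal FedAvg sketch (illustrative only): logistic regression trained locally
# at each hypothetical site; only weight vectors reach the aggregator.

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def local_train(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of full-batch gradient descent on one site's data.

    `weights` is [bias, w1, ..., wd]; `data` is a list of (features, label).
    """
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))) - y
            grad[0] += err
            for j, xj in enumerate(x, start=1):
                grad[j] += err * xj
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w

def federated_average(site_weights, site_sizes):
    """Average site models, weighting each by its local sample count."""
    total = sum(site_sizes)
    return [
        sum(w[j] * n for w, n in zip(site_weights, site_sizes)) / total
        for j in range(len(site_weights[0]))
    ]

def run_fedavg(sites, n_features, rounds=20):
    global_w = [0.0] * (n_features + 1)
    for _ in range(rounds):
        # Each site starts from the current global model and trains locally.
        updates = [local_train(global_w, data) for data in sites]
        global_w = federated_average(updates, [len(d) for d in sites])
    return global_w

# Usage: three synthetic "hospitals" whose feature distributions differ.
random.seed(0)

def make_site(n, shift):
    points = [random.gauss(shift, 1.0) for _ in range(n)]
    return [([x], 1 if x > 0 else 0) for x in points]

sites = [make_site(50, -0.5), make_site(80, 0.0), make_site(30, 0.7)]
w = run_fedavg(sites, n_features=1)
print("global weights:", w)
```

Weighting by sample count keeps a small site from pulling the global model as hard as a large one; in practice, frameworks add secure aggregation and privacy accounting on top of this loop.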

Transfer learning is another pragmatic method: pretraining models on large, generic medical datasets and then fine-tuning them with smaller, specialized data from specific patient populations. We also leverage synthetic data generation and augmentation to increase diversity and reduce overfitting. But caution is warranted: not all synthetic data is created equal. If it fails to represent biological variability or inadvertently encodes bias, the results can be worse than training on limited real-world data.
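One inexpensive guard against the failure modes just described is to compare synthetic data with the real data before training on it. The sketch below, assuming records are plain dictionaries, flags two symptoms: subgroup shares that drift away from the real data (a possible encoded bias) and numeric features whose spread collapses (lost biological variability). The function name and both thresholds are hypothetical placeholders, not validated acceptance criteria, and passing these checks does not by itself establish that synthetic data is fit for use.

```python
from collections import Counter
from statistics import pstdev

def group_shares(records, group_key):
    """Fraction of records belonging to each value of `group_key`."""
    counts = Counter(r[group_key] for r in records)
    return {g: c / len(records) for g, c in counts.items()}

def check_synthetic_fidelity(real, synth, group_key, numeric_keys,
                             max_share_gap=0.05, min_std_ratio=0.7):
    """Return human-readable warnings; an empty list means no check fired.

    Thresholds are illustrative placeholders (hypothetical).
    """
    warnings = []
    real_shares = group_shares(real, group_key)
    synth_shares = group_shares(synth, group_key)
    # Subgroup representation: does the synthetic set shift demographic shares?
    for g in sorted(set(real_shares) | set(synth_shares)):
        gap = abs(real_shares.get(g, 0.0) - synth_shares.get(g, 0.0))
        if gap > max_share_gap:
            warnings.append(f"{group_key}={g}: share differs by {gap:.2f}")
    # Variability: has the generator collapsed the spread of a feature?
    for key in numeric_keys:
        real_sd = pstdev([r[key] for r in real])
        synth_sd = pstdev([r[key] for r in synth])
        if real_sd > 0 and synth_sd / real_sd < min_std_ratio:
            warnings.append(f"{key}: synthetic spread is {synth_sd / real_sd:.2f}x real")
    return warnings

# Usage with toy heart-rate records (hypothetical fields "sex" and "hr").
real = ([{"sex": "F", "hr": 60 + i % 40} for i in range(50)]
        + [{"sex": "M", "hr": 55 + i % 45} for i in range(50)])
synth = [{"sex": "F", "hr": 78} for _ in range(20)] + [{"sex": "M", "hr": 77} for _ in range(80)]
for warning in check_synthetic_fidelity(real, synth, "sex", ["hr"]):
    print(warning)
```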

Bias and Fairness: Built-in, Not Bolted On

Medical AI must perform equitably across demographic groups such as age, race, and gender, as well as across social determinants of health. This requires a fairness-by-design approach, including:

- Bias-aware data sampling during model development

- Fairness metrics (e.g., demographic parity, equalized odds) applied to both training and test datasets

- Stratified performance validation across subgroups

- Targeted mitigation techniques like re-weighting, re-sampling, or adversarial debiasing
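The two fairness metrics named above, together with the stratified per-subgroup view, can be computed from predictions, labels, and group membership alone. The sketch below is a minimal pure-Python illustration, assuming binary 0/1 labels and predictions and that every group contains both classes; the function names are hypothetical. Demographic parity difference is the largest gap in positive-prediction rate between groups, and equalized odds difference is the largest gap in either true-positive or false-positive rate. Open-source libraries such as Fairlearn offer maintained implementations of these metrics.

```python
from collections import defaultdict

def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true-positive rate and false-positive rate.

    Labels and predictions are 0/1; every group must contain both classes.
    """
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["pred_pos"] += p
        if t == 1:
            c["pos"] += 1
            c["tp"] += p
        else:
            c["neg"] += 1
            c["fp"] += p
    rates = {}
    for g, c in counts.items():
        if c["pos"] == 0 or c["neg"] == 0:
            raise ValueError(f"group {g!r} lacks positive or negative examples")
        rates[g] = {
            "selection_rate": c["pred_pos"] / c["n"],
            "tpr": c["tp"] / c["pos"],  # sensitivity
            "fpr": c["fp"] / c["neg"],
        }
    return rates

def demographic_parity_difference(rates):
    """Largest gap in positive-prediction rate between any two groups."""
    sel = [r["selection_rate"] for r in rates.values()]
    return max(sel) - min(sel)

def equalized_odds_difference(rates):
    """Largest gap in TPR or FPR between any two groups."""
    tprs = [r["tpr"] for r in rates.values()]
    fprs = [r["fpr"] for r in rates.values()]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))

# Usage on a toy example with two subgroups, A and B.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
rates = group_rates(y_true, y_pred, groups)
for g, r in sorted(rates.items()):
    print(g, r)  # stratified, per-subgroup view
print("DP difference:", demographic_parity_difference(rates))  # 0.5
print("EO difference:", equalized_odds_difference(rates))      # 0.5
```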

These practices are fast becoming regulatory requirements, as seen in the EU AI Act and emerging guidance from bodies like the U.S. Food and Drug Administration (FDA) and the World Health Organization (WHO).
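Re-weighting, one of the mitigation techniques listed above, can be sketched with the reweighing scheme popularized by Kamiran and Calders: each training sample from group g with label y receives the weight P(g)·P(y)/P(g, y). When every (group, label) combination occurs in the data, the weighted dataset shows no statistical dependence between group and label, so under-represented combinations count for more during training. The function below is a hypothetical helper written for illustration, not an API from any particular library.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-sample weights w(g, y) = P(g) * P(y) / P(g, y).

    Combinations that are rarer than independence would predict get
    weights above 1; over-represented combinations get weights below 1.
    """
    n = len(labels)
    g_counts = Counter(groups)
    y_counts = Counter(labels)
    gy_counts = Counter(zip(groups, labels))
    return [
        (g_counts[g] / n) * (y_counts[y] / n) / (gy_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]

# Usage: group A is mostly positive, group B has a single negative case.
groups = ["A", "A", "A", "B"]
labels = [1, 1, 0, 0]
print(reweighing_weights(groups, labels))  # [0.75, 0.75, 1.5, 0.5]
```

The resulting list can be passed as per-sample weights to any trainer that accepts them, for example the `sample_weight` argument of `fit` in many scikit-learn estimators.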

Model Selection: Matching Architecture to Application

AI models must be tailored to the data modality and use case. For example:

- Convolutional neural networks (CNNs) or transformers for diagnostic imaging

- Recurrent models, such as long short-term memory (LSTM) networks, for time-series data like heart rate or insulin levels

- Decision tree ensembles (e.g., random forests or gradient-boosted trees) for tabular data such as lab results or patient-reported outcomes

- Large language models (LLMs) and retrieval-augmented generation (RAG) for summarizing clinician notes or aiding documentation

Each model class comes with its own risks and needs tailored methods for testing reliability and robustness. Often, systems deploy hybrid models combining imaging interpretation, signal processing, and natural language components. The challenge is integrating these models in a way that preserves trustworthiness, including performance, interpretability, and traceability.
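As one example of a robustness test, the sketch below measures how often a classifier's output flips when small random noise is added to its inputs. It assumes a model exposed as a plain `predict` function over a list of numeric features and uses Gaussian noise as the perturbation; both are simplifying assumptions, since imaging, waveform, and text models each need perturbations that reflect their own real-world artifacts. The `flip_rate` helper and the toy threshold model are hypothetical.

```python
import random

def flip_rate(predict, inputs, noise_sd, trials=20, seed=0):
    """Fraction of (input, trial) pairs whose predicted class changes under noise.

    `predict` maps a list of floats to a class label; noise is i.i.d. Gaussian
    with standard deviation `noise_sd` added to every feature.
    """
    rng = random.Random(seed)
    flips = 0
    total = 0
    for x in inputs:
        base = predict(x)
        for _ in range(trials):
            noisy = [xi + rng.gauss(0.0, noise_sd) for xi in x]
            flips += predict(noisy) != base
            total += 1
    return flips / total

# Usage with a toy threshold model: stable far from its decision boundary,
# unstable for inputs sitting right on it.
def threshold_model(x):
    return int(x[0] > 0.5)

print(flip_rate(threshold_model, [[0.0], [1.0]], noise_sd=0.01))
print(flip_rate(threshold_model, [[0.5]], noise_sd=0.1, trials=200))
```

Sweeping `noise_sd` over a range of clinically plausible measurement errors, and reporting the flip rate per subgroup, turns this into a simple stability profile for each model class.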

Validation in the Real World

Regulatory approval is only the start. True trust in AI systems comes from real-world performance monitoring. Here's how we approach this:

- Stratified train/test/holdout splits, so that each relevant subgroup is represented in every split

- Cross-institution testing for generalization

- Shadow mode deployments, where models make decisions in parallel but without influencing care

- Controlled pilot rollouts with feedback loops from clinicians

- Continuous monitoring for model and data drift

- Trigger-based revalidation if performance drops or usage patterns change
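The first step in the list above, stratified splitting, can be sketched as follows. Records are grouped by a caller-supplied stratum (for example a combination of sex and outcome label), each stratum is shuffled, and the same train/test/holdout fractions are applied within every stratum so that no subgroup ends up missing from a split. The function name, the 70/15/15 default, and the record fields are assumptions for illustration.

```python
import random
from collections import defaultdict

def stratified_split(records, strata_key, fractions=(0.7, 0.15, 0.15), seed=42):
    """Split records into train/test/holdout, applying the same fractions
    within each stratum defined by `strata_key(record)`."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for r in records:
        by_stratum[strata_key(r)].append(r)
    train, test, holdout = [], [], []
    for key in sorted(by_stratum):  # sorted for reproducible ordering
        items = by_stratum[key]
        rng.shuffle(items)
        n_train = round(len(items) * fractions[0])
        n_test = round(len(items) * fractions[1])
        train += items[:n_train]
        test += items[n_train:n_train + n_test]
        holdout += items[n_train + n_test:]
    return train, test, holdout

# Usage with toy records (hypothetical fields "id", "sex", "label").
records = [{"id": i, "sex": "F" if i % 4 == 0 else "M", "label": i % 2} for i in range(200)]
train, test, holdout = stratified_split(records, lambda r: (r["sex"], r["label"]))
print(len(train), len(test), len(holdout))
```

If a patient contributes several records, the split should be made at the patient level instead, so that the same person never appears in both training and evaluation data.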

This full-stack validation strategy mirrors what we used in automotive safety systems, where millions of scenarios were simulated or replayed before real-world deployment.
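The continuous-monitoring and trigger-based revalidation steps listed earlier can be illustrated with a small drift check. The sketch below uses the Population Stability Index (PSI), one common drift statistic, computed over equal-width bins of a reference sample, and combines it with a performance-drop check into a single revalidation trigger. The choice of PSI, the binning scheme, and both thresholds are assumptions for illustration; a real device would define its drift metrics and limits in its monitoring plan.

```python
import math

def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between a reference and a current sample.

    Equal-width bins span the reference range; out-of-range values are
    clipped into the end bins; `eps` avoids log(0) for empty bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0

    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    ref, cur = histogram(reference), histogram(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

def needs_revalidation(psi_value, recent_metric, baseline_metric,
                       psi_limit=0.2, max_metric_drop=0.05):
    """Trigger revalidation on input drift or on a drop in a monitored metric.

    Both limits are hypothetical placeholders.
    """
    return psi_value > psi_limit or (baseline_metric - recent_metric) > max_metric_drop

# Usage: a stable input stream versus one shifted toward higher values.
reference = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]
print(psi(reference, reference))  # 0.0
print(needs_revalidation(psi(reference, shifted), recent_metric=0.90, baseline_metric=0.90))
```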

Industry Collaboration and the Road Ahead

We are reaching a point where cross-manufacturer, cross-hospital collaboration is critical. Shared datasets, especially diverse, anonymized, and well-labeled ones, are essential to overcoming bias and scarcity. We are seeing early progress through academic-industry partnerships, public data consortia, and medical AI benchmarking initiatives.

In the long term, the future of trustworthy AI in medical devices will rely on shared responsibility across manufacturers, regulators, clinicians, and data stewards. Transparency, interoperability, and validation at scale will be the pillars of progress.